Hospital patients are at risk of a number of life-threatening complications, especially sepsis—a condition that can kill within hours and contributes to one out of three in-hospital deaths in the U.S. Overworked doctors and nurses often have little time to spend with each patient, and this problem can go unnoticed until it is too late.

Academics and electronic-health-record companies have developed automated systems that send reminders to check patients for sepsis, but the sheer number of alerts can cause health care providers to ignore or turn off these notices. Researchers have been trying to use machine learning to fine-tune such programs and reduce the number of alerts they generate. Now one algorithm has proved its mettle in real hospitals, helping doctors and nurses treat sepsis cases nearly two hours earlier on average—and cutting the condition’s hospital mortality rate by 18 percent.

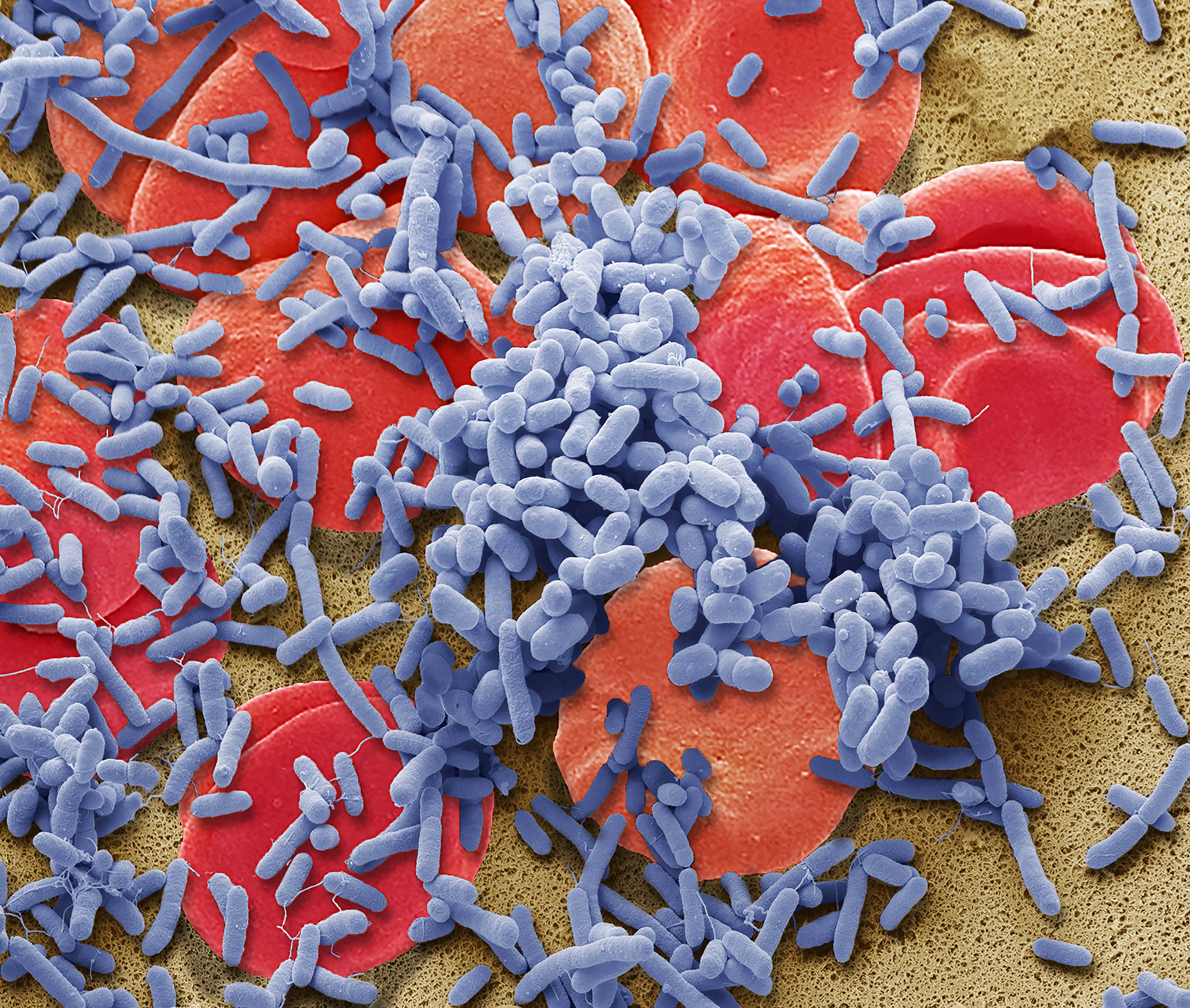

Sepsis, which happens when the body’s response to an infection spirals out of control, can lead to organ failure, limb loss and death. Roughly 1.7 million adults in the U.S. develop sepsis each year, and about 270,000 of them die, according to the Centers for Disease Control and Prevention. Although most cases originate outside the hospital, the condition is a major cause of patient mortality in this setting. Catching the problem as quickly as possible is crucial to preventing the worst outcomes. “Sepsis spirals extremely fast—like in a matter of hours if you don’t get timely treatment,” says Suchi Saria, CEO and founder of Bayesian Health, a company that develops machine-learning algorithms for medical use. “I lost my nephew to sepsis. And in his case, for instance, sepsis wasn’t suspected or detected until he was already in late stages of what’s called septic shock…, where it’s much harder to recover.”

But in a busy hospital, prompt sepsis diagnosis can be difficult. Under the current standard of care, Saria explains, a health care provider should take notice when a patient displays any two out of four sepsis warning signs, including fever and confusion. Some existing warning systems alert physicians when this happens—but many patients display at least two of the four criteria during a typical hospital stay, Saria says, adding that this can give warning programs a high false-positive rate. “A lot of these other programs have such a high false-alert rate that providers are turning off that alert without even acknowledging it,” says Karin Molander, who is an emergency medicine physician and chair of the nonprofit Sepsis Alliance and was not involved in the development of the new sepsis-detection algorithm. Because of how commonly the warning signs occur, physicians must also consider factors such as a person’s age, medical history and recent lab test results. Putting together all the relevant information takes time, however—time sepsis patients do not have.

In a well-connected electronic-records system, known sepsis risk factors are available but may take time to find. That’s where machine-learning algorithms come in. Several academic and industry groups are teaching these programs to recognize the risk factors for sepsis and other complications and to warn health care providers about which patients are in particular danger. Saria and her colleagues at Johns Hopkins University, where she directs the Machine Learning and Healthcare Lab, began work on one such algorithm in 2015. The program scanned patients’ electronic health records for factors that increase sepsis risk and combined this information with current vital signs and lab tests to create a score indicating which patients were likely to develop septic shock. A few years later, Saria founded Bayesian Health, where her team used machine learning to increase the sensitivity, accuracy and speed of their program, dubbed Targeted Real-Time Early Warning System (TREWS).

More recently, Saria and a team of researchers assessed TREWS’s performance in the real world. The program was incorporated over two years into the workflow of about 2,000 health care providers at five sites affiliated with the Johns Hopkins Medicine system, covering both well-resourced academic institutions and community hospitals. Doctors and nurses used the program in more than 760,000 encounters with patients—including more than 17,000 who developed sepsis. The results of this trial, which suggest TREWS led to earlier sepsis diagnosis and reduced mortality, are described in three papers published in npj Digital Medicine and Nature Medicine late last month.

“I think that this model for machine learning may prove as vital to sepsis care as the EKG [electrocardiogram] machine has proved in diagnosing a heart attack,” Molander says. “It is going to allow the clinician to go from the computer…, trying to analyze 15 years’ worth of information, to go back to the bedside and reassess the patient more rapidly—which is where we need to be.”

TREWS is not the first program to demonstrate its value in such trials. Mark Sendak, a physician and population health and data science lead at the Duke Institute for Health Innovation, works on a similar program developed by Duke researchers, called Sepsis Watch. He points out that other machine-learning systems focused on health care—not necessarily those created for sepsis detection in particular—have already undergone large-scale trials. One groundbreaking test of an artificial-intelligence-based system for diagnosing a complication of diabetes was designed with input from the U.S. Food and Drug Administration. Other programs have also been tested in multiple different hospital systems, he notes.

“These tools have a valuable role in improving the way that we care for patients,” Sendak says, adding that the new system “is another example of that.” He hopes to see even more studies, ideally standardized trials that involve research support and guidance from external partners, such as the FDA, who don’t have a stake in the results. This is a challenge because it is extremely difficult to design health care trials of machine-learning systems, including the new studies on TREWS. “Anything that takes an algorithm and puts it into practice and studies how it’s used and its impact is phenomenal,” he says. “And doing that in the peer-reviewed literature—massive kudos.”

As an emergency room physician, Molander was impressed by the fact that the AI does not make sepsis decisions on behalf of health care providers. Instead it flags a patient’s electronic health record so that when doctors or nurses check the record, they see a note that the patient is at risk of sepsis, as well as a list of reasons why. Unlike some programs, the alert system for TREWS does not prevent “the clinician from doing any other further work [on the computer] without acknowledging the alert,” Molander explains. “They have a little reminder there, off in the corner of the system, saying, ‘Look, this person is at higher risk of decompensation [organ failure] due to sepsis, and these are the reasons why we think you need to be concerned.’” This helps busy doctors and nurses prioritize which patients to check on first without removing their ability to make their own decisions. “They can choose to disagree because we don’t want to take autonomy away from the provider,” Saria says. “This is a tool to assist. This is not a tool to tell them what to do.”

The trial also gathered data on whether doctors and nurses were willing to use an alert system such as TREWS. For instance, 89 percent of its notifications were actually evaluated rather than dismissed automatically, as Molander described happening with some other systems. Health care providers’ willingness to check the program could be because TREWS cut the high rate of false sepsis-warning notifications by a factor of 10, according to a press release from Bayesian Health, reducing the barrage of alerts and making it easier to distinguish which patients were in real danger. “That’s mind-blowing,” Molander says. “That is really important because it allows providers to increase their trust in machine learning.”

Building trust is important, but so is collecting evidence. Health care institutions would not be likely to accept machine-learning systems without proof they work well. “In tech, people are much more willing to adopt new ideas if they believe in the thought process. But in medicine, you really need rigorous data and prospective studies to support the claim to get scalable adoption,” Saria says.

“In some sense, we are building the products while also building the evidence base and the standards for how the work needs to be conducted and how potential adopters need to be scrutinizing the tools that we’re building,” Sendak says. Achieving widespread adoption for any algorithmic alert system is challenging because different hospitals may use different electronic-records software or may already have a competing system in place. Many hospitals also have limited resources, which makes it difficult for them to assess the effectiveness of an algorithmic alert tool—or to access technical support when such systems inevitably require repairs, updates or troubleshooting.

Still, Saria hopes to use the new trial data to expand the use of TREWS. She says she is building partnerships with multiple electronic-records companies so she can incorporate the algorithm into more hospital systems. She also wants to explore whether machine-learning algorithms could warn about other complications people can experience in hospitals. For instance, some patients must be monitored for cardiac arrest, heavy bleeding and bedsores, which can impact health during hospital stays and recuperation afterward.

“We’ve had a lot of learning around what ‘AI done right’ looks like, and we’ve published significantly on it. But what this is now showing is AI done right actually gets provider adoption,” Saria says. By incorporating an AI program into existing records systems, where it can become part of a health care provider’s workflow, “you can suddenly start chopping your way through all these preventable harms in order to improve outcomes—which benefits the system, benefits the patient and benefits the clinicians.”
